ELK = Elasticsearch, Logstash and Kibana

We are moving our applications to Kubernetes, so I installed Rancher Desktop on my laptop to get some hands-on experience. I’ll post my findings here.

While a pod is running, we can follow its logs from the command line:

kubectl logs <podname> -f

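When a pod goes away, its logs go with it, and kubectl logs no longer works for that pod. This is by design, and the solution is to collect the logs outside the pod. For this I use Fluent-bit to gather the logs, and Elasticsearch and Kibana (the E and K of ELK, with Fluent-bit in the role of Logstash) to store and view them.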

Elasticsearch

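First I deploy Elasticsearch. I had to tune down the resources because my laptop has limited memory. Both Elasticsearch and Kibana run in the logging namespace, which the Fluent-bit configuration further down depends on. Deployment and Service YAML below: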

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    component: elasticsearch-logging
  name: elasticsearch-logging
spec:
  selector:
    matchLabels:
      component: elasticsearch-logging
  template:
    metadata:
      labels:
        component: elasticsearch-logging
    spec:
      containers:
      - name: elasticsearch
        image: docker.elastic.co/elasticsearch/elasticsearch:7.17.16
        env:
        - name: discovery.type
          value: single-node
        ports:
        - containerPort: 9200
          name: http
          protocol: TCP
        resources:
          limits:
            cpu: 500m
            memory: 1Gi
          requests:
            cpu: 500m
            memory: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
spec:
  type: NodePort
  selector:
    component: elasticsearch-logging
  ports:
  - port: 9200
    targetPort: 9200

After deployment, Elasticsearch needs some time to start. Just let it do its thing.
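
You can poll Elasticsearch's cluster health endpoint to see when it has finished starting. Below is a minimal Python sketch that uses only the standard library. It assumes the service is forwarded to the laptop with `kubectl port-forward -n logging svc/elasticsearch-logging 9200:9200`. The function names are my own. On a single node, replica shards cannot be allocated, so the status can stay yellow. The sketch therefore treats yellow as ready.

```python
import json
import time
import urllib.request
from typing import Callable

# Assumption: Elasticsearch is port-forwarded to localhost:9200.
ES_URL = "http://localhost:9200"


def fetch_health(base_url: str = ES_URL) -> dict:
    """GET /_cluster/health and return the parsed JSON body."""
    with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=5) as resp:
        return json.load(resp)


def is_ready(health: dict) -> bool:
    """Yellow or green counts as ready; a single node cannot place replica shards."""
    return health.get("status") in ("yellow", "green")


def wait_for_elasticsearch(
    fetch: Callable[[], dict] = fetch_health,
    attempts: int = 30,
    delay_s: float = 5.0,
) -> bool:
    """Poll the health endpoint; True once ready, False after all attempts fail."""
    for _ in range(attempts):
        try:
            if is_ready(fetch()):
                return True
        except OSError:
            # URLError, refused connections and timeouts are all OSError subclasses:
            # the pod is still starting or the port-forward is not up yet, so retry.
            pass
        time.sleep(delay_s)
    return False
```

`wait_for_elasticsearch()` retries every 5 seconds, up to 30 times, and returns True as soon as the cluster answers with yellow or green.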

Fluent-bit

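Fluent-bit reads the container logs from the nodes' disks and sends them to a storage backend, which here is Elasticsearch. For this I use the Helm chart from https://github.com/fluent/helm-charts. In the chart's default values.yaml, the outputs entry under config must point at the right address. The Host is the cluster-internal DNS name of the Elasticsearch service, in the form `<service>.<namespace>.svc.cluster.local`, so Elasticsearch has to run in the logging namespace. The changes are shown below: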

outputs: |
  [OUTPUT]
      Name es
      Match kube.*
      Host elasticsearch-logging.logging.svc.cluster.local
      Logstash_Format On
      Retry_Limit 5
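
With Logstash_Format On, the es output writes one index per day instead of a single fixed index. With the plugin's defaults (prefix `logstash`, date format `%Y.%m.%d`, joined with a dash), the names look like `logstash-2024.01.15`. That naming is where the `logstash-*` index pattern in Kibana comes from. The hypothetical helper below mimics the naming in Python to make it concrete. It is not Fluent-bit code, and it assumes the date comes from the record's timestamp in UTC.

```python
from datetime import datetime, timezone


def logstash_index(
    ts: datetime,
    prefix: str = "logstash",       # Logstash_Prefix default
    date_format: str = "%Y.%m.%d",  # Logstash_DateFormat default
) -> str:
    """Mimic the daily index name used by Fluent-bit's es output with Logstash_Format On."""
    if ts.tzinfo is None:
        # Treat naive timestamps as UTC.
        ts = ts.replace(tzinfo=timezone.utc)
    # Assumption: the date part is the record timestamp converted to UTC.
    return f"{prefix}-{ts.astimezone(timezone.utc).strftime(date_format)}"
```

A line logged at 10:30 UTC on 15 January 2024 lands in `logstash-2024.01.15`. The next day's lines go to a new index.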

Now that the logs are sent to Elasticsearch, it is time to view them.
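
A quick way to confirm that Fluent-bit is delivering is to list the daily indices and their document counts through the `_cat/indices` API. Below is a minimal Python sketch that uses only the standard library. It assumes a port-forward to localhost:9200 (`kubectl port-forward -n logging svc/elasticsearch-logging 9200:9200`). The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Elasticsearch is port-forwarded to localhost:9200.
ES_URL = "http://localhost:9200"


def fetch_cat_indices(pattern: str = "logstash-*", base_url: str = ES_URL) -> str:
    """GET /_cat/indices/<pattern>?format=json and return the raw response text."""
    url = f"{base_url}/_cat/indices/{pattern}?format=json"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def doc_counts(cat_json: str) -> dict:
    """Map index name to document count from a _cat/indices JSON response."""
    # The _cat APIs return numbers as strings; closed indices may report no count.
    return {row["index"]: int(row.get("docs.count") or 0) for row in json.loads(cat_json)}
```

`doc_counts(fetch_cat_indices())` should list one index per day with a growing count. On this single-node setup the indices show health yellow, because their replica shards have nowhere to go.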

Kibana

Kibana is the visualisation tool from Elastic. Again I deploy it myself and point it at Elasticsearch through the ELASTICSEARCH_HOSTS environment variable. Deployment, Service and Ingress YAML below:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    component: kibana
  name: kibana
spec:
  selector:
    matchLabels:
      component: kibana
  template:
    metadata:
      labels:
        component: kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:7.17.16
        env:
        - name: ELASTICSEARCH_HOSTS
          value: '["http://elasticsearch-logging:9200"]'
        ports:
        - containerPort: 5601
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
spec:
  type: NodePort
  selector:
    component: kibana
  ports:
  - port: 5601
    targetPort: 5601
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kibana
spec:
  ingressClassName: nginx
  rules:
  - host: kibana.localdev.me
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: kibana
            port:
              number: 5601

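I use an Ingress to reach the Kibana UI at http://kibana.localdev.me. The domain localdev.me, including its subdomains, resolves to 127.0.0.1 and is free to use. The Ingress sets ingressClassName: nginx, so it needs an NGINX ingress controller running in the cluster. Works great.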
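
If http://kibana.localdev.me does not load, first rule out name resolution. The minimal Python sketch below uses only the standard library, and the function name is my own. It checks whether a host name resolves to a loopback address on your machine. Some home routers drop DNS answers that point to 127.0.0.1 as DNS rebinding protection. In that case the check returns False.

```python
import ipaddress
import socket
from typing import Callable


def resolves_to_loopback(
    host: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> bool:
    """True if host resolves to a loopback address such as 127.0.0.1."""
    try:
        return ipaddress.ip_address(resolve(host)).is_loopback
    except OSError:
        # socket.gaierror (an OSError subclass) means the name did not resolve at all.
        return False
```

`resolves_to_loopback("kibana.localdev.me")` should return True on a working setup.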

Viewing the logs

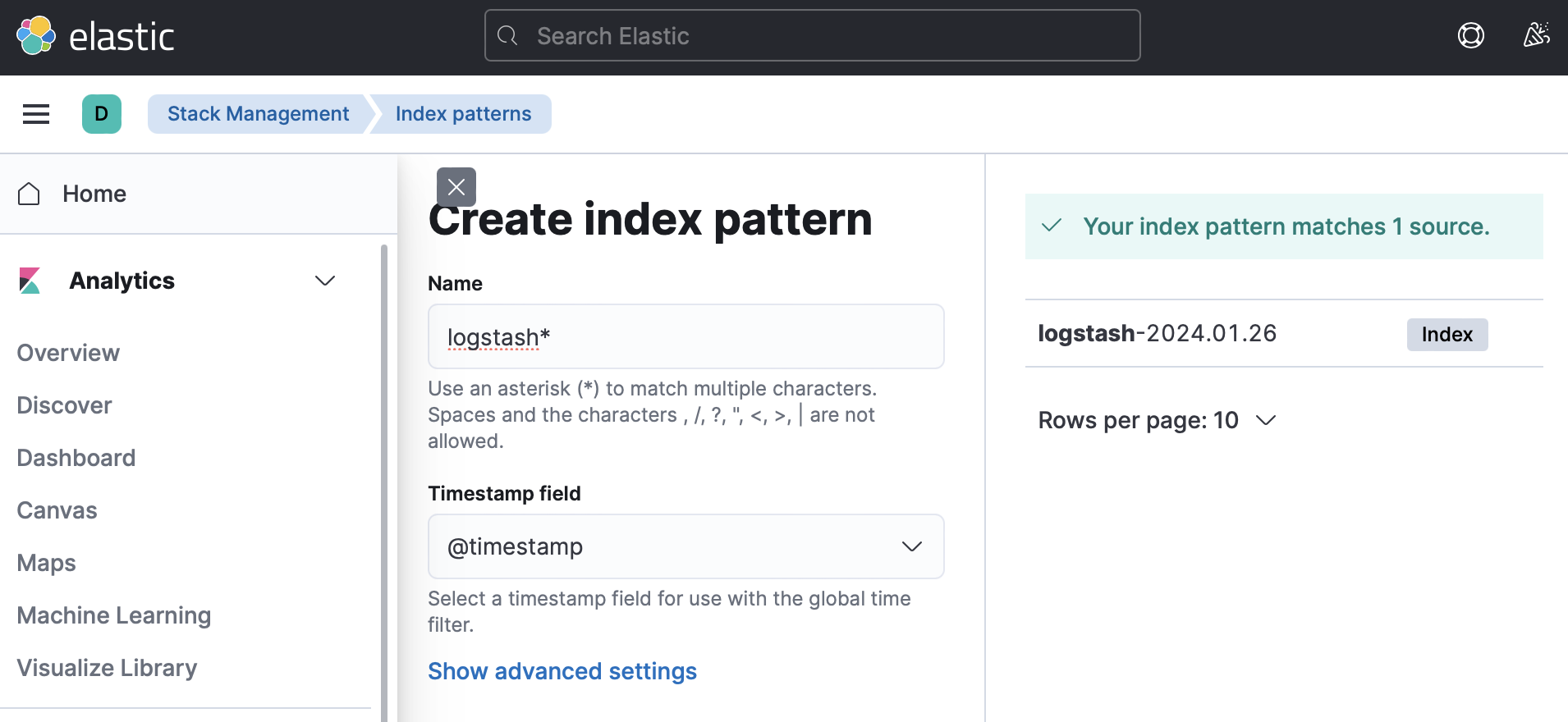

When I first open the Kibana UI, it prompts me to create an index pattern. Just follow along and create the pattern `logstash-*` with `@timestamp` as the time field.
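
Clicking through the wizard is fine once. If you rebuild the cluster often, you can create the same index pattern through Kibana's saved objects API. Below is a minimal Python sketch. It assumes Kibana is reachable at http://kibana.localdev.me through the Ingress above, and the helper names are my own. The kbn-xsrf header is included because Kibana refuses write requests to its API without it.

```python
import json
import urllib.request

# Assumption: Kibana is reachable through the Ingress host used in this post.
KIBANA_URL = "http://kibana.localdev.me"


def index_pattern_request(
    title: str = "logstash-*",
    time_field: str = "@timestamp",
    base_url: str = KIBANA_URL,
) -> urllib.request.Request:
    """Build a POST to Kibana's saved objects API that creates an index pattern."""
    body = {"attributes": {"title": title, "timeFieldName": time_field}}
    return urllib.request.Request(
        f"{base_url}/api/saved_objects/index-pattern",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "kbn-xsrf": "true",  # required by Kibana for non-GET API calls
        },
        method="POST",
    )


def create_index_pattern(**kwargs) -> dict:
    """Send the request and return Kibana's JSON response (the new saved object)."""
    with urllib.request.urlopen(index_pattern_request(**kwargs), timeout=10) as resp:
        return json.load(resp)
```

Run `create_index_pattern()` once after Kibana is up. Afterwards the pattern shows up in Discover just as if it had been created through the UI.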

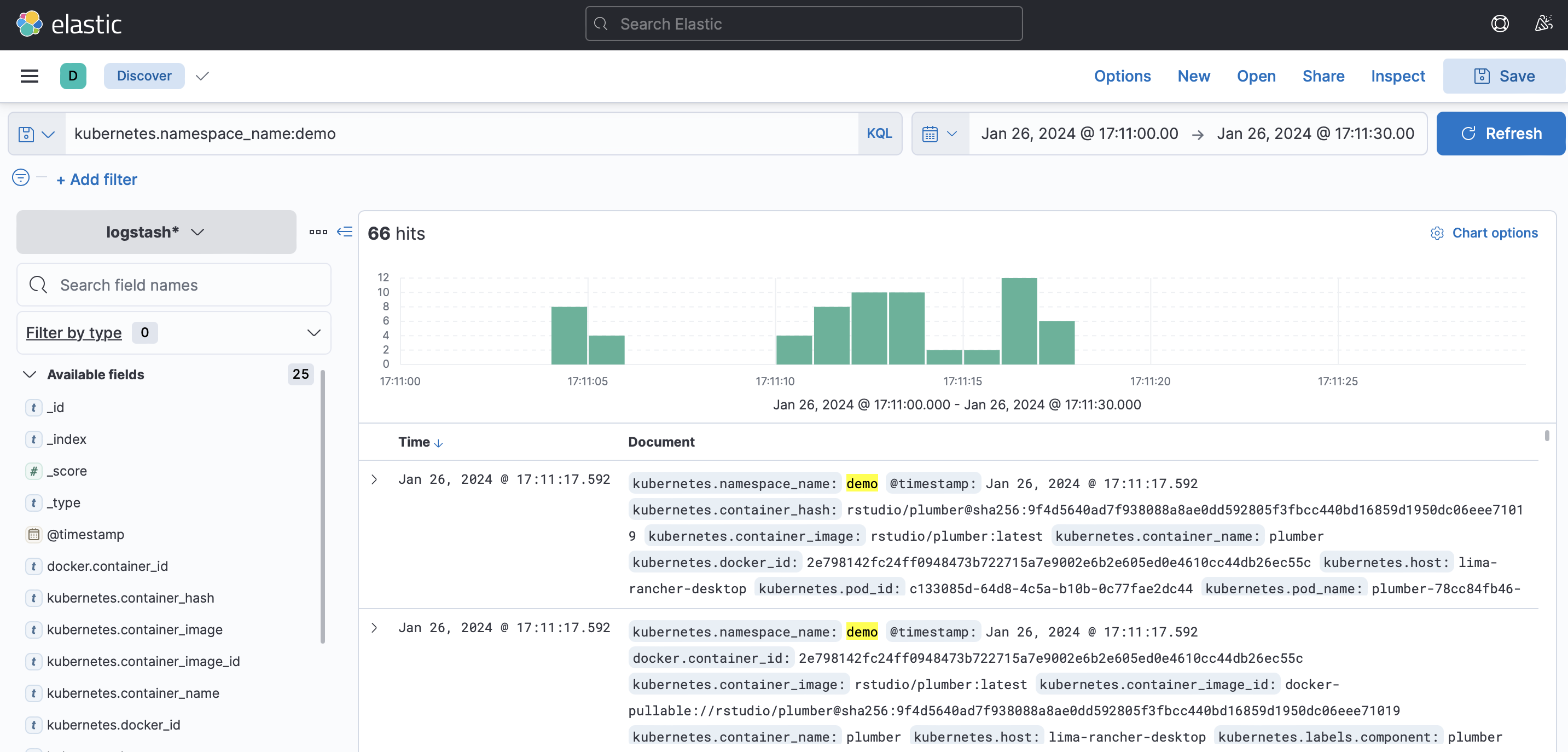

Now I can filter the logs on the namespace that contains my demo applications (an Nginx website and an RStudio plumber web API) and view them.
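
You can also run the namespace filter from Kibana (in KQL, something like `kubernetes.namespace_name : "demo"`) directly against Elasticsearch. Fluent-bit's kubernetes filter stores the namespace in `kubernetes.namespace_name`. The sketch below makes three assumptions:

- The default dynamic mapping applies, so an exact match goes against the `.keyword` sub-field.
- Elasticsearch is port-forwarded to localhost:9200.
- The namespace is called `demo` (a hypothetical name).

```python
import json
import urllib.request

# Assumption: Elasticsearch is port-forwarded to localhost:9200.
ES_URL = "http://localhost:9200"


def namespace_query(namespace: str, size: int = 20) -> dict:
    """Search body for the newest log documents from one Kubernetes namespace."""
    return {
        "size": size,
        # Fluent-bit's kubernetes filter stores the namespace in kubernetes.namespace_name;
        # with default dynamic mapping the exact (untokenised) value is in .keyword.
        "query": {"term": {"kubernetes.namespace_name.keyword": namespace}},
        "sort": [{"@timestamp": {"order": "desc"}}],
    }


def search_namespace(namespace: str, base_url: str = ES_URL) -> list:
    """Search all logstash-* indices and return the _source of each hit."""
    req = urllib.request.Request(
        f"{base_url}/logstash-*/_search",
        data=json.dumps(namespace_query(namespace)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return [hit["_source"] for hit in json.load(resp)["hits"]["hits"]]
```

`search_namespace("demo")` returns the 20 newest documents. The sketch returns whole documents because the name of the message field depends on the parser in use.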

The logs are stored in Elasticsearch, so they can still be viewed after the pod is removed.